Efficient rendering of soft particles on mobile GPUs

Analyzing the depth of scene fragments is an important part of composing effects like soft particles, but it can easily become a bottleneck on mobile devices. In this article I’ll explain which OpenGL ES extensions can replace the depth pre-pass on different mobile GPUs, and cover some additional optimizations we applied to improve our apps.

As in previous articles, I use our 3D Buddha Live Wallpaper as a benchmark because the app uses soft particles and its overall scene is quite simple.

Explanation of problem

Soft particles need to compare the depth of each particle fragment with the depth of the scene behind it. The classic approach is a render-to-texture depth pre-pass, which uses a lot of memory bandwidth: the GPU has to write the depth buffer out to external memory and then read that texture back for each particle fragment. Deferred rendering to a single or even multiple render targets is a commonly used technique on powerful desktop and console GPUs, but on mobile devices it can easily become too expensive. It requires reading and writing large uncompressed textures, which increases power usage and may even hit the GPU-to-memory bus bandwidth limit if the scene and shaders are already quite complex.
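To get a feel for the scale, here is a back-of-the-envelope sketch in C of the write traffic alone. The resolution, texel size and frame rate are assumptions chosen for illustration, not measurements from our app, and `depth_prepass_write_bytes_per_second` is a hypothetical helper. It ignores the reads done by particle fragments and any compression or caching a real GPU may apply.

```c
#include <stdint.h>

/* Hypothetical helper: bytes written per second when a full-resolution,
 * uncompressed depth texture is stored to external memory once per frame.
 * All inputs are assumptions supplied by the caller. */
uint64_t depth_prepass_write_bytes_per_second(uint32_t width, uint32_t height,
                                              uint32_t bytes_per_texel,
                                              uint32_t frames_per_second) {
    /* Widen before multiplying so large targets cannot overflow 32 bits. */
    uint64_t bytes_per_frame = (uint64_t)width * height * bytes_per_texel;
    return bytes_per_frame * frames_per_second;
}
```

For an assumed 1080×2160 target with 4 bytes per texel at 60 fps this gives 559,872,000 bytes per second, roughly 560 MB/s, before a single particle fragment has read the texture back.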

Fortunately, mobile GPU vendors have come up with ways to eliminate the render-to-texture pass completely. In this article I’ll cover the ways we’ve improved the performance and power efficiency of our live wallpapers.

Depth fetch in fragment shader

Certain GPUs support the ARM_shader_framebuffer_fetch_depth_stencil OpenGL ES extension. When enabled, it allows the fragment shader to read the current values of the depth and stencil buffers, which eliminates the need to render scene depth into a separate texture.

Currently this extension is supported by Mali GPUs (2nd-generation Bifrost and newer) and by modern Adreno GPUs (5xx and 6xx series). The depth and stencil values are exposed through two additional read-only built-in GLSL variables, gl_LastFragDepthARM and gl_LastFragStencilARM. Because the extension is so simple, modifying the shader was straightforward: all I had to do was remove the depth texture fetch and use the value from the built-in variable instead.
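Since only some GPUs expose the extension, an app has to decide at runtime which pipeline to use. Below is a minimal sketch of such a check in C, assuming the space-separated string returned by glGetString(GL_EXTENSIONS) is passed in, where the extension is listed with a GL_ prefix. This is not the code from our app, and `has_gl_extension` is a hypothetical helper.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: returns true if `name` appears as a whole token in
 * the space-separated extension list `extensions`. */
bool has_gl_extension(const char *extensions, const char *name) {
    if (extensions == NULL || name == NULL || *name == '\0') {
        return false;
    }
    size_t len = strlen(name);
    const char *p = extensions;
    while ((p = strstr(p, name)) != NULL) {
        /* Accept the match only if it is delimited by spaces or string ends. */
        bool starts_token = (p == extensions) || (p[-1] == ' ');
        bool ends_token = (p[len] == ' ') || (p[len] == '\0');
        if (starts_token && ends_token) {
            return true;
        }
        p += len; /* skip this partial match and keep searching */
    }
    return false;
}
```

With a current GL context this could be called as has_gl_extension((const char *)glGetString(GL_EXTENSIONS), "GL_ARM_shader_framebuffer_fetch_depth_stencil"), falling back to the render-to-texture pass when it returns false. Matching whole tokens matters here because the name of the color-only GL_ARM_shader_framebuffer_fetch extension is a prefix of this one.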

Visually, there was no difference between the render-to-texture and depth fetch pipelines, and the app kept running at the same steady 60 fps.

This is why it is important to run a profiler even when the visual quality is great and the app runs smoothly. Because the scene is not too complex and modern mobile GPUs are powerful enough, the non-optimized rendering pipeline already provided decent performance. However, reducing GPU load means that the system can draw more UI elements over the live wallpaper, and reducing memory bandwidth means lower overall power usage. And when the system is lightly loaded, CPU and GPU clocks are kept low, which improves thermal efficiency.

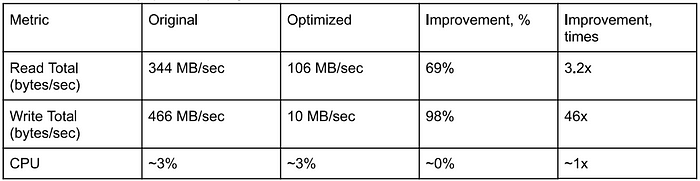

As expected, the profiler results revealed significantly reduced memory bandwidth usage.

3D Buddha Live Wallpaper. Snapdragon Profiler, Pixel 3:

3D Buddha Live Wallpaper. ARM Streamline, Galaxy A21s:

These profilers provide different metrics, but you can see that overall memory bandwidth is drastically reduced, especially for write operations. On the Exynos chipset, even CPU load is slightly reduced. This indicates that OpenGL driver overhead has decreased, because the optimized pipeline is effectively single-pass rendering.

Pixel Local Storage

This is a more complex but more flexible approach. It requires the EXT_shader_pixel_local_storage extension, which is available on modern PowerVR and Mali GPUs. This extension introduces the concept of pixel local storage. Because GPUs supporting it use tiled rendering, arbitrary per-fragment information can be stored within each tile. This information is kept in fast on-chip tile memory and isn’t copied to the framebuffer, so access to it is very fast and doesn’t consume additional memory bandwidth.

Using Pixel Local Storage is more complex than using depth fetch, and it requires refactoring the rendering pipeline. I will cover how to do this in a separate article. Currently our live wallpapers don’t support rendering soft particles using Pixel Local Storage; it is still a work in progress.

Extra — shader math optimization

Additionally, the depth linearization function has been optimized to use less math. This function is called for each fragment of a soft particle, so optimizing it is important.

The old function accepted the near and far planes in a vec2 uCameraRange uniform:

uniform vec2 uCameraRange;

float calc_depth(in float z) {
    return (2.0 * uCameraRange.x) / (uCameraRange.y + uCameraRange.x - z * (uCameraRange.y - uCameraRange.x));
}

As you can see, some sub-expressions are identical for every fragment and can therefore be pre-calculated on the CPU and passed to the shader as ready-to-use values.

The new function accepts a vec3 uCameraRange with pre-calculated values:

// x = 2 * near; y = far + near; z = far - near
uniform vec3 uCameraRange;

float calc_depth(in float z) {
    return uCameraRange.x / (uCameraRange.y - z * uCameraRange.z);
}
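On the CPU side, the three values only need to be recomputed when the near or far plane changes. The following is a minimal sketch in C, not the host code of our app: the `CameraRange` struct and all function names are hypothetical. It also ports both shader functions to C so that the rewrite can be checked for equivalence.

```c
/* Hypothetical CPU-side mirror of the vec3 uCameraRange uniform. */
typedef struct {
    float x; /* 2 * near   */
    float y; /* far + near */
    float z; /* far - near */
} CameraRange;

/* Pre-calculates the per-frame constants once on the CPU. */
CameraRange make_camera_range(float near_plane, float far_plane) {
    CameraRange r;
    r.x = 2.0f * near_plane;
    r.y = far_plane + near_plane;
    r.z = far_plane - near_plane;
    return r;
}

/* C port of the old shader function (near and far passed directly). */
float calc_depth_old(float z, float near_plane, float far_plane) {
    return (2.0f * near_plane) /
           (far_plane + near_plane - z * (far_plane - near_plane));
}

/* C port of the new shader function (uses pre-calculated values). */
float calc_depth_new(float z, CameraRange r) {
    return r.x / (r.y - z * r.z);
}
```

The resulting x, y and z could then be uploaded with a single glUniform3f call whenever the projection changes, instead of being recomputed for every fragment.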

Offline compilation of the shaders showed the following results:

PVRShaderEditor, when targeting Series 6 GPUs, showed that both shaders use the same 22 cycles, so in this particular case the optimized math is not critical for PowerVR GPUs.

Mali offline shader compiler, targeting Bifrost GPUs, showed some improvements:

Uniform register count was reduced from 16 to 12 (both values are well within hardware limits, but the lower, the better).

Additionally, this compiler detects such unoptimized code: it reports “Has uniform computation: true” for the old shader and no longer reports it for the new one.